R Code Optimization I: Foundations and Principles

Series of Posts on Code Optimization

This is the first post of a small series focused on code optimization.

Here I draw from my experience developing scientific software in both academia and industry to share practical techniques and tools that help streamline R workflows without sacrificing clarity.

- This post lays down some of the whats and whys of code optimization.

- The second post focuses on code design and readability.

- The third post goes deep into vectorization, parallelization, and memory management.

- The final post covers profiling, benchmarking, and the optimization workflow.

Let’s dig in!

A Fine Balance

At some point everyone writes a regrettably sluggish piece of junk code. It is just a matter of when, and there is no shame in that.

At that point, the quickest fix for our junk code is simple enough: throw more money at our cloud provider, or upgrade our rig, and get MORE!

More cores, more RAM, more POWER! Because who doesn’t love bragging about that shit? I surely do!

On the other hand, money is expensive (duh!), and intensive computing has a serious environmental footprint. With this in mind, it's also good to remember that we went to the Moon and back on less than 4 KB of RAM, so there must be a way to make our junk code run in a more sustainable manner.

This is where code optimization comes into play!

Optimizing code is about making it efficient for developers, users, and machines alike. For us, pitiful carbon-based blobs, readable code is easier to wield, ergo efficient. For a machine, efficient code runs fast and has a small memory footprint.

And there is an inherent tension there!

Optimizing for computational performance alone often comes at the cost of readability, while clean, readable code can sometimes slow things down. That’s why optimization requires making some strategic choices.

Before diving headfirst into code optimization, it’s crucial to understand the dimensions of code efficiency and when code optimization is actually worth it.

The Dimensions of Code Efficiency

Code efficiency involves a complex web of causes and effects. Laying the whole thing bare is beyond the scope of this post, but I believe that understanding some of the foundations may help articulate successful code optimization strategies.

Let’s take a look at the diagram below.

On the left, there are several major code features that we can tweak to improve (or worsen!) code efficiency. Changes in these features are bound to shape the efficiency landscape of our code in often divergent ways.

Making choices

There are four main code features we can tweak to improve efficiency:

- The programming language we choose.

- How simple and readable our code is.

- The algorithm design and data structures we use.

- How well we exploit the hardware: vectorization, parallelization, memory management, and all that jazz.

We’ll dive deep into each of these in the next articles of this series.

Living with the consequences

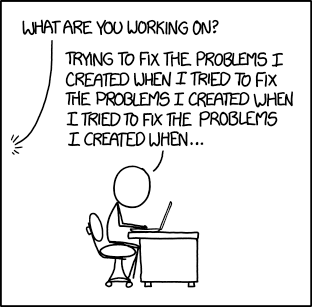

The code we write has a life cycle with a recurrent stage known as "I'm gonna ruin your day (again)", which can be triggered by meatbags and machines alike.

By meatbags I mean users and yourself. Users, right? Clear docs and clean APIs may keep the good ones at bay. But in the end they'll find ways to motivate you to go back to whatever your cute past self coded, and you might find yourself regretting things.

Machines don't lie: they will let you know when your code is slow, uses too much memory, or handles files and connections badly. These issues not only degrade the user's experience, they may also limit the code's ability to adapt to larger workloads (scalability) and increase its energy footprint and running cost.

With all that in mind, it's easy to see that optimization is a multidimensional trade-off: improving one aspect often degrades others. For example, speeding up execution might increase memory usage while making the code less readable, or parallelization can create I/O bottlenecks. There's rarely a single "best" solution, only trade-offs based on context and constraints.
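
To make the speed versus memory trade-off a bit more concrete, here is a minimal R sketch using memoization, that is, caching results that were already computed. The functions `fib_slow()` and `fib_fast()` and the `fib_cache` environment are hypothetical names chosen for illustration. The cached version avoids recomputing the same values over and over, but pays for it with a cache that grows with every new input and with code that is a little harder to read.

```r
# Plain recursive version: no extra memory, but exponential running time.
fib_slow <- function(n) {
  if (n <= 2) return(1)
  fib_slow(n - 1) + fib_slow(n - 2)
}

# Memoized version: each result is stored in an environment used as a cache.
# Much faster, but the cache grows with every new n (more memory),
# and the logic is a bit harder to follow.
fib_cache <- new.env()

fib_fast <- function(n) {
  key <- as.character(n)
  # Return the cached value if we have already computed it
  if (!is.null(fib_cache[[key]])) return(fib_cache[[key]])
  result <- if (n <= 2) 1 else fib_fast(n - 1) + fib_fast(n - 2)
  fib_cache[[key]] <- result
  result
}

fib_slow(25)           # 75025
fib_fast(25)           # 75025, with far fewer function calls
length(ls(fib_cache))  # 25 cached results now live in memory
```

Neither version is universally better: if memory is tight or the function runs only once on small inputs, the simple version may well be the wiser choice.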

To Optimize Or Not To Optimize

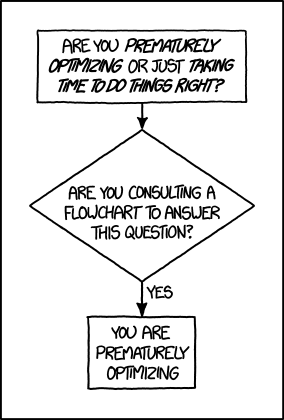

Don’t fret if you find yourself in this Shakespearean conundrum, because the First Commandment of Code Optimization says:

“Thou shalt not optimize thy code.”

If your code is reasonably simple and works as expected, you can call it a day and move on, because there is no reason whatsoever to attempt any optimization. This idea aligns well with a principle enunciated long ago:

“Premature optimization is the root of all evil.” (Donald Knuth, “Structured Programming with go to Statements”, 1974)

Premature optimization happens when we let performance considerations get in the way of our code design. Designing code is a taxing task already, and designing code while trying to make it efficient at once is even harder! Having a non-trivial fraction of our mental bandwidth focused on optimization results in code more complex than it should be, and increases the chance of introducing bugs.

That said, there are legitimate reasons to break the First Commandment. Maybe you are bold enough to publish your code in a paper (Reviewer #2 says hi), release it as a package for the community, or simply share it with your data science team. In these cases, the Second Commandment comes into play.

“Thou shalt make thy code simple.”

Optimizing code for simplicity aims to make it readable, maintainable, and easy to use and debug. In essence, this commandment ensures that we optimize the time required to interact with the code. Any code that saves the time of users and maintainers is efficient enough already!

The next post in this series will elaborate on code simplicity. In the meantime, let's imagine we have clean and elegant code that runs once and gets the job done. Great! But what if it must run thousands of times in production? Or worse, what if a single execution takes hours or even days? In these cases, optimization shifts from a nice-to-have to a requirement. Yep, there's a commandment for this too:

“Thou shalt optimize wisely.”

At this point you might be at the ready, fingers on the keyboard, about to deface your pretty code for the sake of sheer performance. Just don't. This is a great point to stop, go back to the whiteboard, and think carefully about what you actually need to do. You gotta be smart about your next steps!

Here are a couple of ideas that might help you get smart about optimization.

First, keep the Pareto Principle in mind! It says that, roughly, 80% of the consequences result from 20% of the causes. When applied to code optimization, this principle translates into a simple fact: most performance issues are produced by a small fraction of the code. From here, the best course of action is to identify these critical code blocks and focus our optimization efforts on them. Once you've identified the real bottlenecks, the next step is making sure that optimization doesn't introduce unnecessary complexity.
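
A crude way to find that critical 20% before reaching for a proper profiler is to time each step of a workflow separately with base R's `system.time()`. In this sketch the three steps are made-up placeholders (one of them deliberately grows a vector inside a loop), chosen so that a single step dominates the total running time; actual timings will vary across machines.

```r
set.seed(1)
n <- 10000

# Step 1: simulate some data (cheap).
t_simulate <- system.time({
  df <- data.frame(id = seq_len(n), value = rnorm(n))
})

# Step 2: square the values by growing a vector inside a loop.
# Every c() call copies the whole vector, so this step is slow.
t_loop <- system.time({
  out_loop <- c()
  for (i in seq_len(n)) out_loop <- c(out_loop, df$value[i]^2)
})

# Step 3: summarize the results (cheap).
t_summary <- system.time({
  result <- summary(out_loop)
})

# Share of the total elapsed time taken by each step.
timings <- c(
  simulate = t_simulate[["elapsed"]],
  loop     = t_loop[["elapsed"]],
  summary  = t_summary[["elapsed"]]
)
round(timings / sum(timings), 2)
```

In a run like this, the loop step should account for most of the elapsed time, which is exactly where optimization effort would pay off; the other two steps are not worth touching.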

Second, beware of over-optimization. Taking code optimization too far can do more harm than good! Over-optimization happens when we keep pushing for marginal performance gains at the expense of clarity. It often results in convoluted one-liners and obscure tricks to save milliseconds that will confuse future you while making your code harder to maintain. Worse yet, excessive tweaking can introduce subtle bugs.
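
As a small, hypothetical illustration of over-optimization, both functions below check which integers in a vector are even. The second one swaps the familiar modulo operator for a bitwise trick with base R's `bitwAnd()`. Whether that trick saves any time at all depends on your machine and R version, but it definitely costs clarity, and it quietly assumes integer-valued input.

```r
# Clear version: reads exactly like the definition of "even".
is_even_clear <- function(x) {
  x %% 2 == 0
}

# "Clever" version: checks the lowest bit with a bitwise AND.
# Obscure to most readers, and only meaningful for integer-valued input.
is_even_tricky <- function(x) {
  bitwAnd(x, 1L) == 0L
}

x <- 1:10
is_even_clear(x)
is_even_tricky(x)  # same result, harder to read
```

If the clear version ever turns out to be a real bottleneck, that's the moment to measure alternatives, not before.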

In short, optimizing wisely means knowing when to stop. A clear, maintainable solution that runs fast enough is often better than a convoluted one that chases marginal gains.

Beyond these important points, there is no golden rule to follow here. Optimize when necessary, but never at the cost of clarity!